Research Spotlight: Karthik Gururangan

Karthik Gururangan is a senior Ph.D. student in the lab of Professor Piotr Piecuch. He completed his undergraduate studies at the University of California, Berkeley, and received his Master of Science in Materials Science from Northwestern University. He joined MSU’s Department of Chemistry in 2019.

Could you speak a bit about the research the Piecuch Group pursues?

Our research group primarily works in the fields of electronic structure theory and quantum chemistry, which are branches of science that deal with understanding and computing the ground and excited states of quantum many-electron systems. This subject is highly relevant to understanding the behaviors of molecular and solid-state systems, especially in chemically relevant contexts, including reactions, bonding, and spectroscopy, where you have to accurately describe the quantum mechanical behavior of many electrons.

Even when you’re talking about a single molecule, for instance, you might be considering tens or even hundreds of electrons, all of which are interacting with each other, so this very quickly becomes an intractable problem.

In quantum chemistry, the goal is to come up with useful and practical approximations to deal with this problem in a feasible way. In the Piecuch research group, we focus a lot on developing approaches using the coupled-cluster theory, which takes advantage of the exponential structure of the ground-state many-body wave function. When you exploit this structure, you open an entire field of very accurate and robust ways of tackling the quantum many-body problem.
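For readers who want the formula behind the "exponential structure" mentioned above, the standard textbook form of the coupled-cluster ansatz is sketched below. The notation is the conventional one and is not taken from the interview: $|\Phi\rangle$ is a reference determinant (typically Hartree-Fock), $T_n$ is the $n$-body cluster operator that generates $n$-fold excitations, and $N$ is the number of correlated electrons.

$$
|\Psi_0\rangle = e^{T}\,|\Phi\rangle, \qquad T = T_1 + T_2 + \cdots + T_N
$$

Practical methods truncate $T$ (for example, at $T_1 + T_2$ for CCSD), and the exponential still generates products of the retained operators, which is a large part of why truncated coupled-cluster methods remain accurate.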

And what originally drew you to this area of chemistry?

I got involved in quantum chemistry research in a sort of nonlinear way. I started out studying materials science, which deals with understanding and engineering the properties of materials, such as mechanical, chemical, electronic, optical, and magnetic properties. It soon becomes very clear that a lot of these properties originate from the electronic structure of the atoms and molecules that comprise the material.

For instance, if you want to know the stiffness of a metal, you need to understand the stiffness of its chemical bonds, which is a direct consequence of the electronic structure. Similarly, if you want to know why something is transparent, you need to understand the energies of permissible transitions between electronic states.

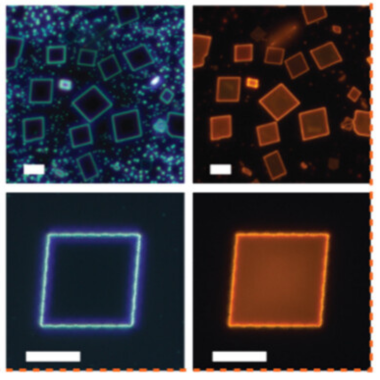

Later on, during my master’s, I worked in the spectroscopy group of Professor Elad Harel. In his laboratory, we were trying to understand the interplay between electronic and vibrational molecular states during photophysical processes, so it’s very much the language of quantum chemistry, and that probably sealed the deal for me. I felt that electronic structure theory and quantum chemistry were subjects that explained a lot of everyday things around us but in a way that is highly mathematical, and I think I found that somewhat alluring.

You recently attended the 54th Midwest Theoretical Chemistry Conference, hosted by UW-Madison, where you took home the award for best student talk (congratulations!). Could you touch on what you discussed in this presentation?

I was talking about a new way of obtaining high-level coupled-cluster energetics that avoids doing most of the computational work. The approach is based on the framework of biorthogonal CC(P;Q) moment expansions developed in the Piecuch group in 2012.

The version of it that I presented, which we call adaptive CC(P;Q), exploits the mathematical structure of the CC(P;Q) moment expansions to help us identify what’s most important and what’s least important in the problem. By treating the important parts in your computations accurately, and dealing with the remainder in a cheap way, you can obtain very accurate results at a reduced cost, and based on the data we have collected using adaptive CC(P;Q) to date, we feel that it is a very promising approach.

We say it’s ‘adaptive’ in the sense that you’re actively learning what you should include in your computations. It’s a black-box way of building your coupled-cluster wave function on the fly: you just click a button, it grows the wave function for you, and it stops when it’s converged. With this approach, you’re doing the minimum amount of work needed to solve the problem and no more, and we can get very accurate coupled-cluster energies in minutes rather than hours, for example. This line of thinking is very common in quantum chemistry, and many modern approaches that people work on seek to exploit sparsity in one way or another.
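The select-and-grow pattern described above can be illustrated with a toy sketch. This is only an analogy under stated assumptions: it does not implement CC(P;Q), it does not use CCpy, and the function name `adaptive_sum`, its parameters, and the numbers are all hypothetical. Each "item" has an accurate (costly) contribution and a cheap estimate; the loop repeatedly promotes the items with the largest cheap estimates to the accurately treated set and stops once the total no longer changes.

```python
def adaptive_sum(exact, cheap, batch=2, tol=1e-6):
    """Toy adaptive loop (hypothetical; NOT the CC(P;Q) algorithm).

    exact[i]: accurate but costly contribution of item i (made-up data)
    cheap[i]: inexpensive estimate of the same contribution
    """
    p_space = set()                     # items treated accurately
    q_space = set(range(len(exact)))    # items treated cheaply
    estimate = sum(cheap)               # start with everything cheap
    history = [estimate]
    while q_space:
        # Rank the remaining items by the magnitude of their cheap estimate.
        ranked = sorted(q_space, key=lambda i: abs(cheap[i]), reverse=True)
        chosen = ranked[:batch]
        p_space.update(chosen)
        q_space.difference_update(chosen)
        # Accurate treatment for selected items, cheap estimate for the rest.
        estimate = (sum(exact[i] for i in p_space)
                    + sum(cheap[i] for i in q_space))
        history.append(estimate)
        # Stop once promoting more items no longer changes the answer.
        if abs(history[-1] - history[-2]) < tol:
            break
    return estimate, sorted(p_space), history


# Made-up contributions: two large items and a tail of tiny ones.
exact = [-1.00, -0.50, -0.01, -0.001, -0.0001]
cheap = [-0.80, -0.40, -0.01, -0.001, -0.0001]
estimate, selected, history = adaptive_sum(exact, cheap)
print(selected)  # [0, 1, 2, 3]
```

In this toy, the loop stops before touching the smallest item because promoting the previous batch changed nothing, which mirrors the idea of doing the minimum amount of work needed and no more.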

Your group’s software is open-sourced and widely shared. How do you see your research in relation to the larger scientific community?

Adaptive CC(P;Q) is just one way of doing accurate quantum chemistry in a faster and more convenient way. The fact that it’s black-box means that it is easy to use for researchers in our field, and with any luck, people outside of the field may even try to use it. That’s where I feel the open-source software aspect comes into it.

We’re always interested in developing new methods and testing them ourselves, but what you really want is for other people to adopt them. Having your method become a workhorse tool for other computational or experimental chemists says more about its success than any paper could. One of the best ways to start this process, especially these days, is to take your code, write it in a way that’s reasonable, and share it online via GitHub. This is the motivation behind CCpy, our group’s newest package for performing coupled-cluster calculations. It’s a one-stop shop for a lot of conventional and unconventional coupled-cluster methods, and it’s a vehicle for communicating our advances in a way that encourages others to not just read a paper, but actually test out these new methods for themselves.

Currently, it seems that CCpy has some visibility in our field. I’ve run into people at conferences who have heard of it and even tried it out with positive results. It has also led to unexpected connections – for instance, I am currently working on a project in relativistic quantum chemistry with Professor Achintya Dutta at IIT Bombay who contacted me for a collaboration after seeing our code online.

Overall, I think that CCpy could pick up traction because it includes methods that are found nowhere else, and it’s a living code that we are constantly updating and making better. In time, I think we could turn it into a competitive alternative to more established codes that researchers can use in practical applications.

Python as a coding language is also very user-friendly and the past several generations of students and researchers have strongly adopted it. It has a natural syntax and a “sandbox” feel that encourages users to examine, modify, and extend the source code. Although the computationally critical kernels in CCpy are written in Fortran, the user works in Python. Our group already has a long history of disseminating our codes through the freely available and widely used GAMESS package, which is one of the venerable electronic structure codes that was started a few decades ago. It felt very appropriate to continue this tradition by sharing our group’s coupled-cluster methods in this modern, GitHub-based open-source arena through CCpy.

Looking ahead, what are some computational hurdles or questions you’re excited to be addressing with your research?

A big-picture theme in this field is that accurate quantum chemistry comes with enormous computational costs, and reducing these costs while maintaining accuracy is a focal point of cutting-edge research. People tend to go about this problem in two different ways. You can either examine the problem from a theoretical perspective to suggest new methods or algorithms to save costs, say by including expensive terms in a cheaper way, or you approach the problem from a high-performance computing viewpoint by implementing existing theories in a way that extracts every ounce of performance out of modern hardware. Ideally, we could do both.

Interestingly, there is an underlying tension between certain categories of modern quantum chemistry methods, including adaptive CC(P;Q), which operate on the basis of exploiting sparsity in the problem, and current high-performance computing architectures, which are based on massively parallel GPU systems. GPUs are great for dense computation, but they are not designed to take advantage of sparsity. As a result, offloading adaptive CC(P;Q) or any other method like it to a GPU poses a number of technical challenges that would most likely kill the performance gains.

I’m curious how, or if, we can overcome these challenges to combine sparse quantum chemical computations of this type with the extremely high-performance GPU computing we’re seeing everywhere now. With this, you might see high-level quantum chemistry methods like adaptive CC(P;Q) applied to a range of new and serious questions, say, in drug discovery or materials design.

Related to this, I remember taking a machine learning class at Northwestern and the instructor explaining, maybe tongue-in-cheek, that the reason we have powerful neural net models trained on armies of GPUs is that my generation played a lot of video games growing up, and we demanded high-quality graphics. Graphics are just vector operations, and modern GPUs are a result of this “form meets function” cycle. Maybe the sparse framework pursued by modern quantum chemistry approaches requires a different type of chip that relaxes the focus on rectilinear, dense memory layouts, thereby tailoring the physical hardware to the type of required computation, rather than the other way around. It’s probably too much to ask for, but it’s an interesting thought.